Concurrency

Concurrency

Dispatchers ensure process isolation.

Allocate time to run the thread.

It’s ‘running’ because the thread started and didn’t end.

There is one core, but multiple threads are ‘running’, ensuring concurrency.

Unlike threads, memory cannot be divided over time.

Memory is limited and allocated by dividing it into spaces.

The OS swindles the process that it monopolizes all resources, but in reality,

resources are limited and used by everyone.

Traffic arrangement is necessary when trying to take limited resources with each other.

Limited resources should be mutual exclusion. And you have to synchronize what you use.

It is necessary to ensure concurrency.

multiple process

OS design is concerned with the management of processes and threads:

- Multi programming

- Multi processing (multi core)

- Distributed Processing (Multiple computers are connected by a network, etc)

`clustering

Terms of Concurrency

atomic operation :

Operations that do not lose execution permission in the middle when certain conditions are present.

critical section :

The protected sections.

race condition :

The state of trying to use resources with each other.

mutual exclusion :

Using resources entirely alone.

deadlock :

The status of waiting for the required resources. And if that resource remains occupied by another process.

A state of being unable to do anything after all.

livelock :

In a state such as a deadlock, But The program is in operation(?)

starvation :

A state in which certain resources are required but are not assigned.

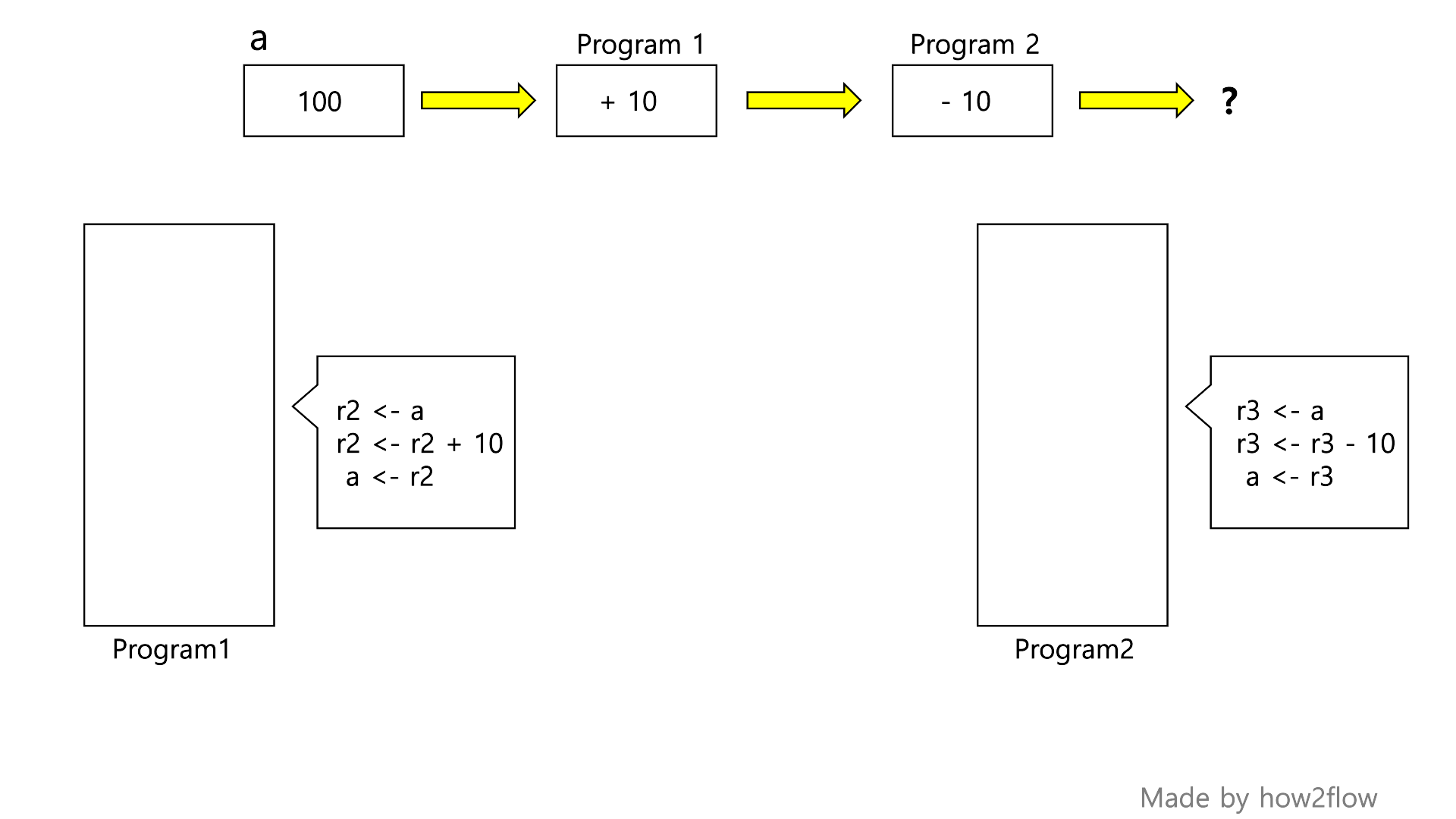

Suppose you have two programs.

It is written by C.

static int a = 100;

int Program1() {

a = a + 10;

return a

}

int Program2() {

a = a - 10;

return a

}

...

Program1();

Program2();

...

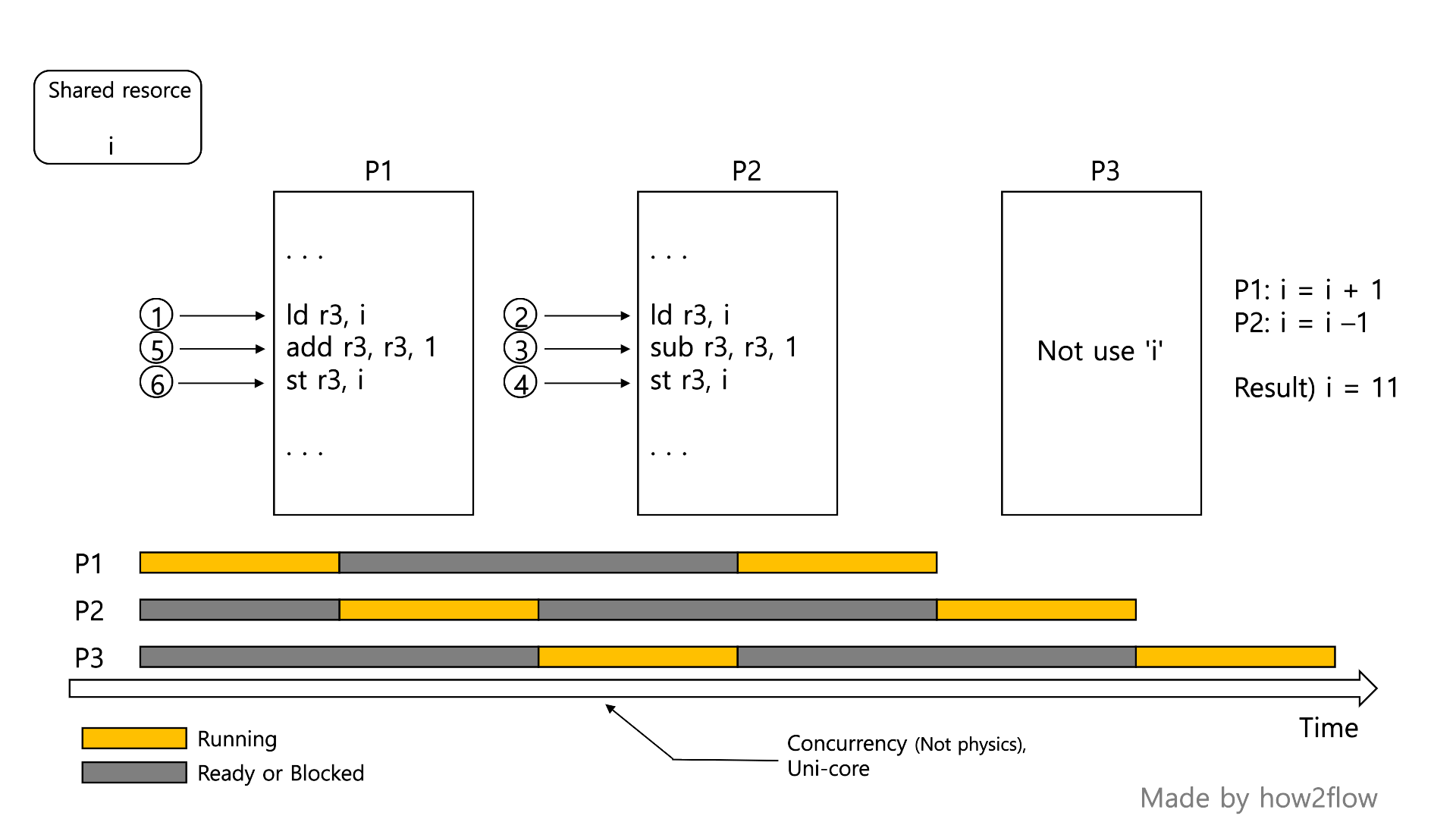

It is assumed that concurrency is guaranteed by time quantum of time.

Of course, C Program is eventually converted into assembly instructions.

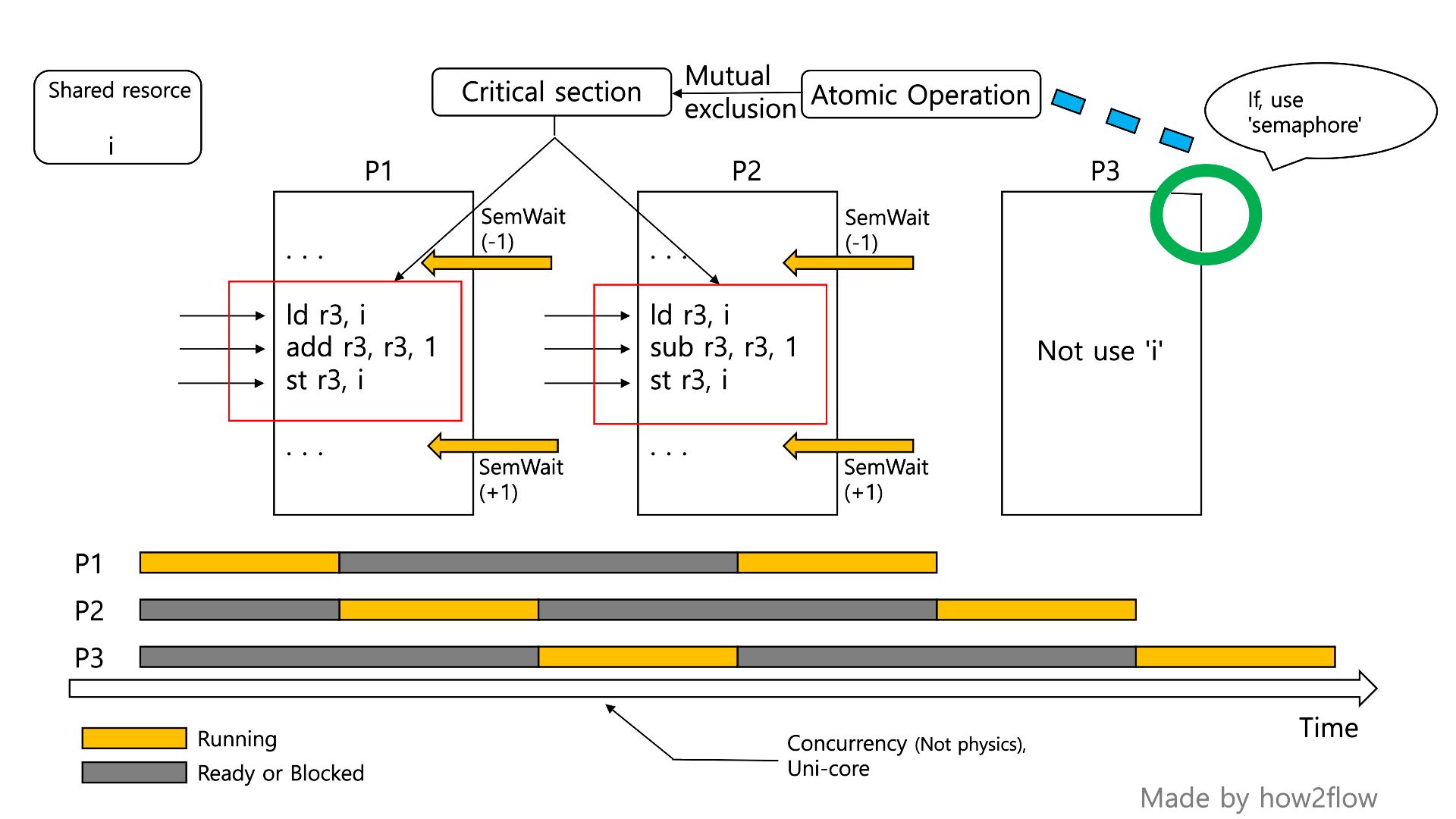

picture [1] Atomic Operation 1

When two processes are completed, the result should be 100.

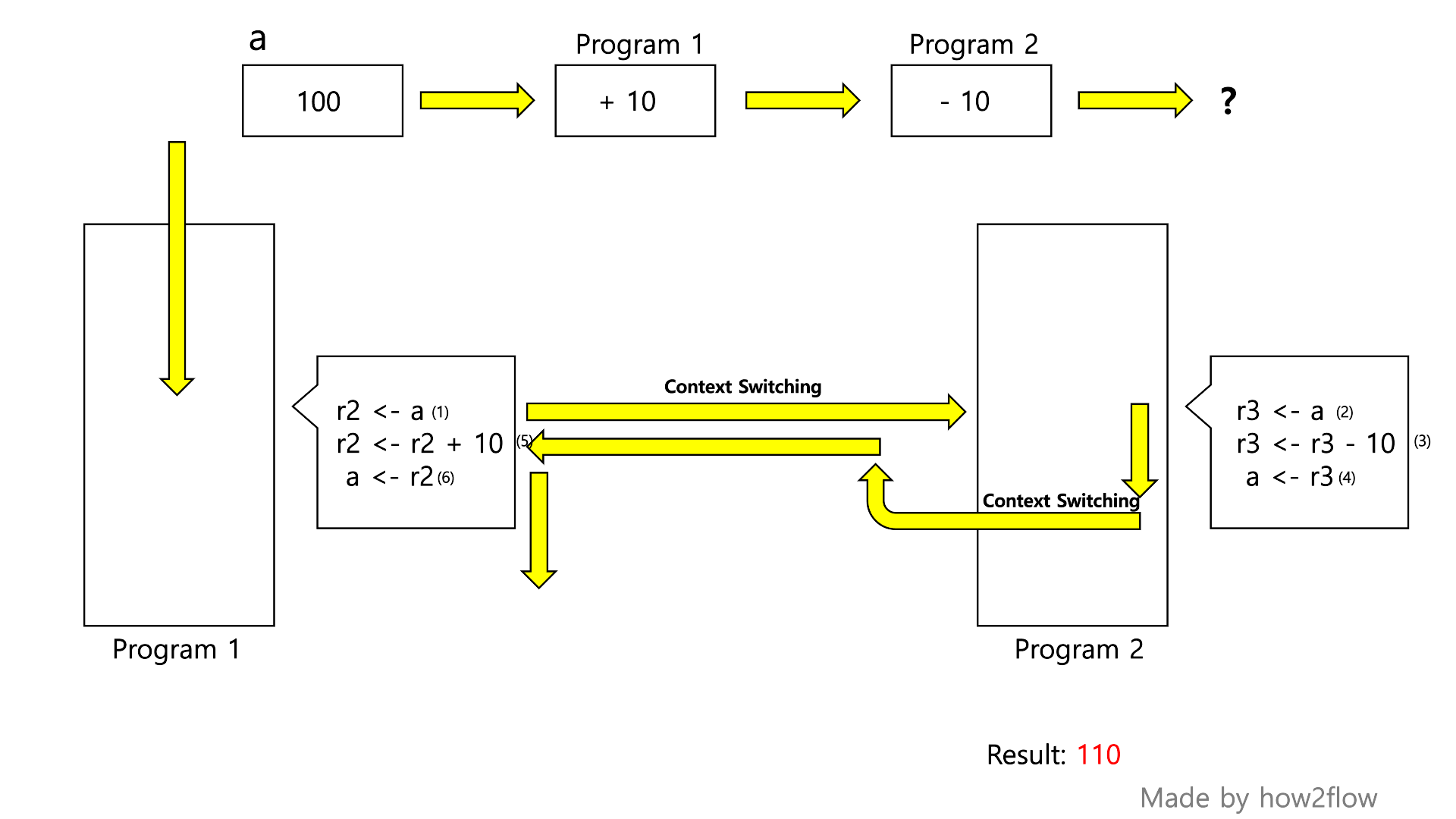

However, if context switching occurs as follows, the result will be different.

picture [2] Atomic Operation 2

So This case needs mutual exclusion .

The exclusion use of a should be guaranteed.

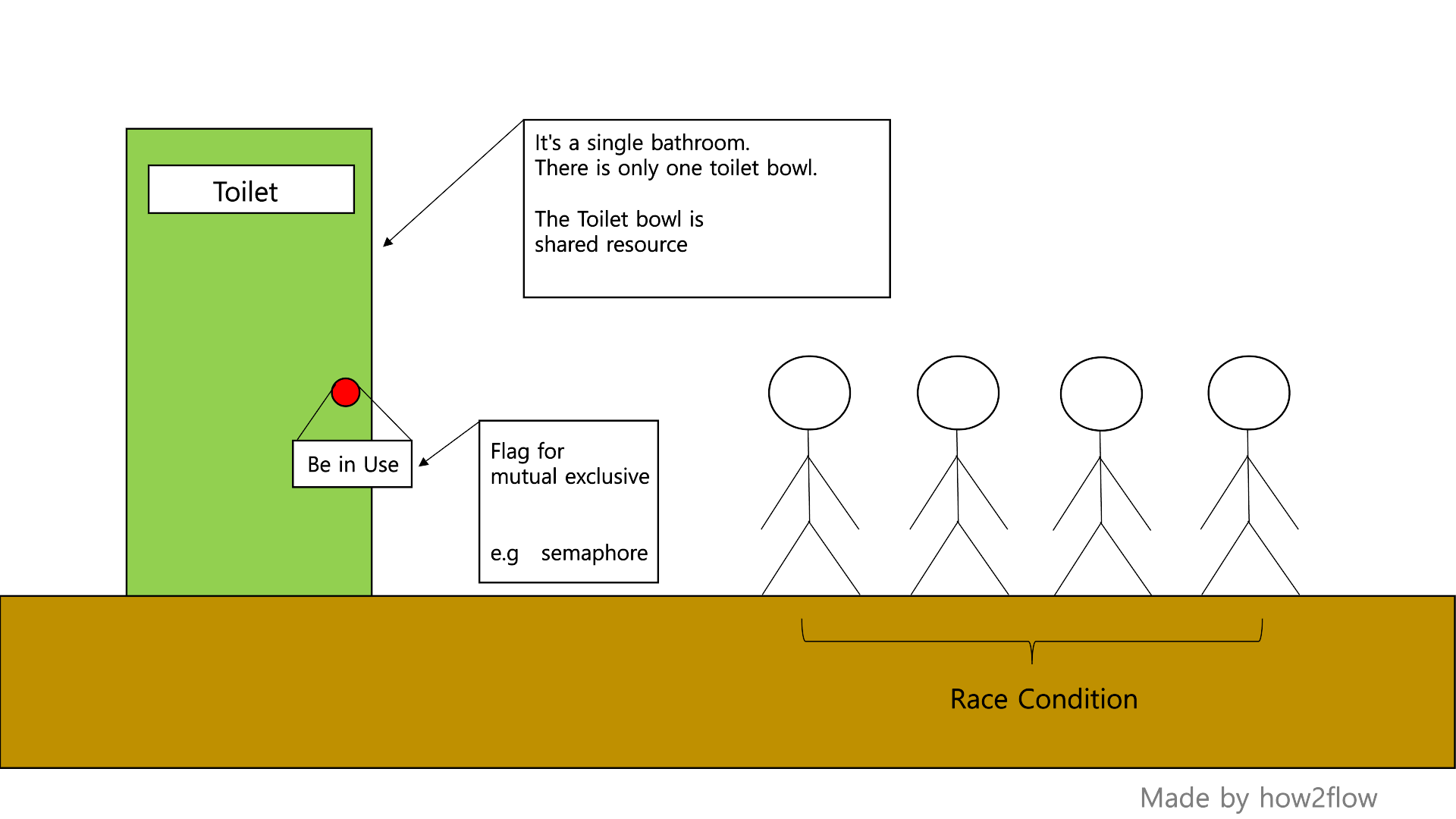

Here’s an example.

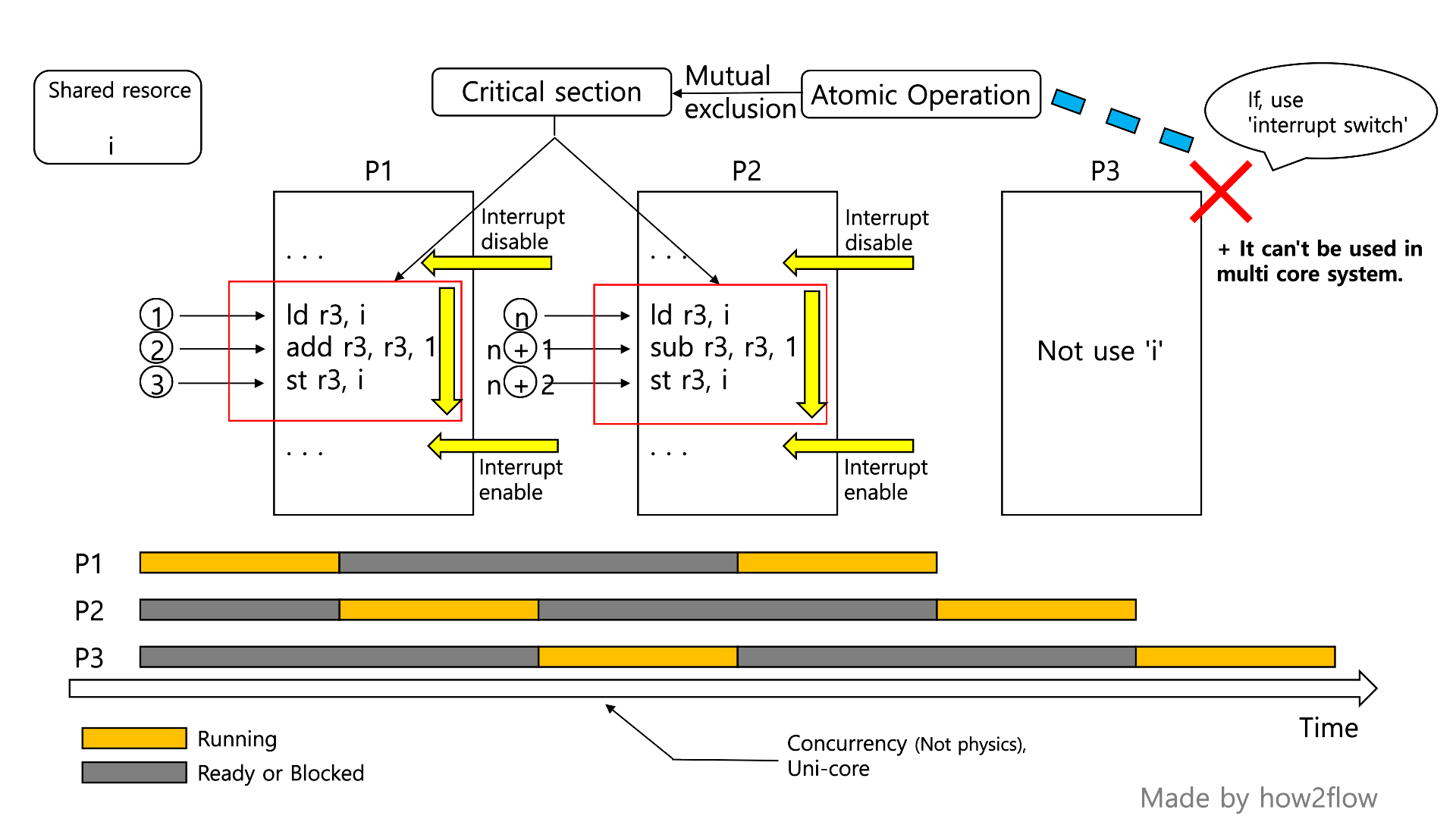

picture [3] Mutual Exclusion

There is only one toilet in the park, so it is assumed that it is for one person only.

But if multiple people have to go to the bathroom, what should we do?

Here, people correspond to the process, and the toilet bowl in the bathroom corresponds to a shared resource.

What if someone opens the door when you poop?

Like you’re not supposed to go into the bathroom when someone’s using it,

When a process uses shared resources, it should not compete with shared resources.

Like hanging a sign while using the toilet and putting it down when you’re done using it,

Raise the flag when the process uses shared resources and lower the flag when it is finished.

The flag is usually used as a variable, and an example is a ‘semaphore’

The problem with ensuring this mutual exclusion is that there are different types and

numbers of shared resources, processes, and the shared resources required by each process.

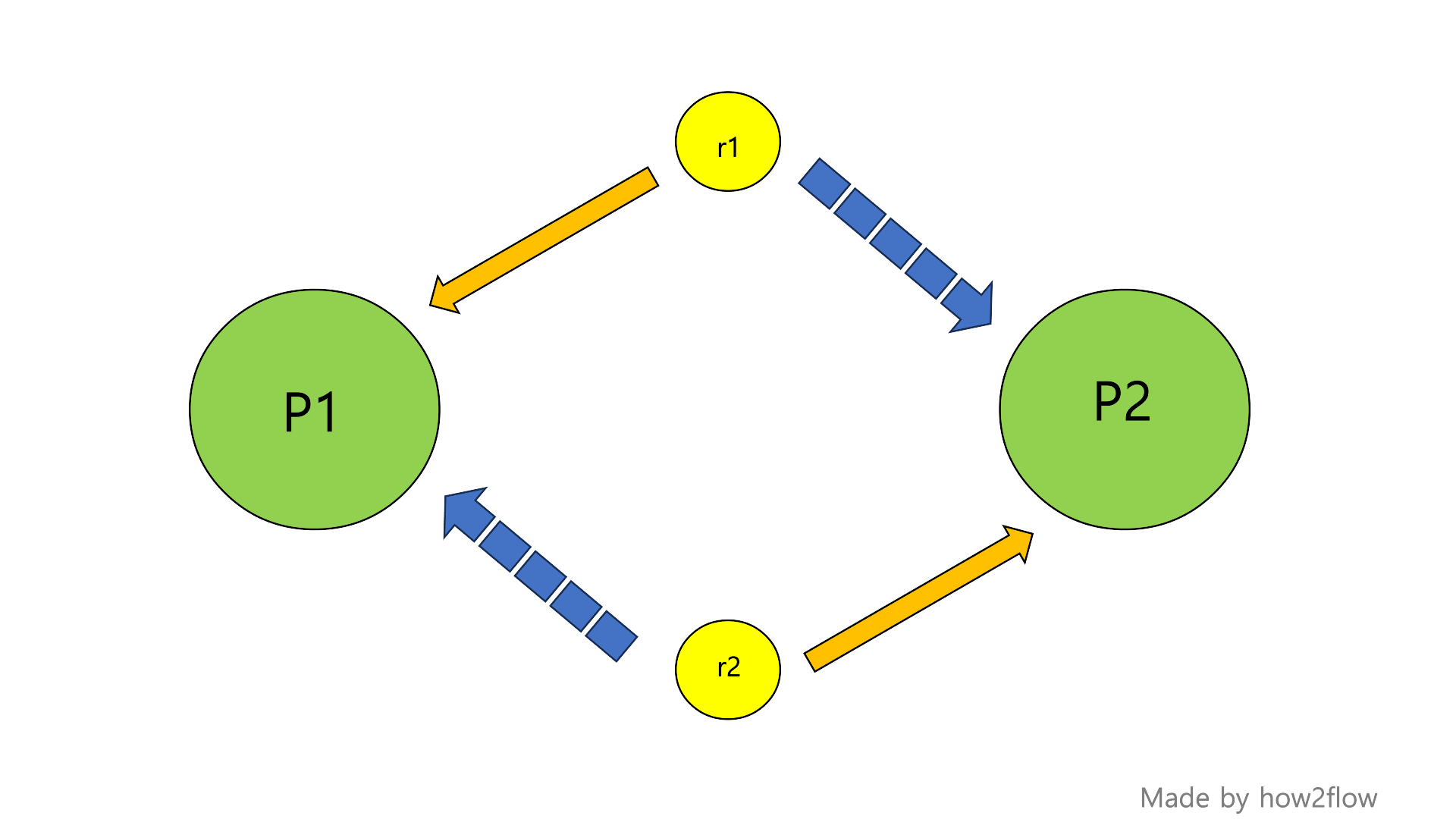

I’ll give you an example.

Process 1 requires resource 1 and resource 2, and process 2 also requires resource 1 and resource 2,

and when resource 1 is allocated to process 1 and resource 2 is allocated to process 2, its own resource has other resources.

Process 1 and process 2 are not executed forever.

picture [4] Dead Lock

Such a difficult situation can come.

A state in which processes are just waiting for each other and nothing is executed is called a deadlock .

If you prioritize a process separately to resolve the problem, some processes may never be allocated resources.

It’s called starvation .

summary

The state in which there are shared resources and various processes want to use each other is called race condition .

In order to solve the race condition , the critical section should be made into an atomic operation .

It is said to support mutual exclusion .

When mutual exclusion is guaranteed, a deadlock occurs that is waiting for the other party’s work to be completed.

If you give priority to resolve deadlocks, A process that continues to fall behind priorities and is unable to allocate resources is called starvation .

Principles of Concurrency

Interleaving and overlapping.

Uniprocessor - the relative speed of execution of processes can’t be predicted.

Uni - processor : (Interleaving only)

| P 1 | --- | P 1 | --- |

| --- | P 2 | --- | --- | ...

| --- | --- | --- | P 3 |

Multi - processor : (Interleaving + Overlapping)

| P 1 | --- | P 1 | --- |

| --- | P 2 | --- | --- | ...

| --- | --- | --- | P 3 |

`-----`-----`-----`-----` ...

`-----`-----`-----`-----`

`-----`-----`-----`-----` ...

`-----`-----`-----`-----`

overlapping :

Since there are multiple cores, different processes are physically executed at the same time.

Difficulties of Concurrency

Considerations when ensuring concurrency.

- Sharing of global resources.

- Difficult for the OS to manage the allocation of resources optimally.

- Difficult to locate programming errors as result are not deterministic and reproducible.

- It may be a problem of its own, but it is also related to competition with other processes.

Race Condition

multiple process or threads read and write data items.

The final result depends on the order of execution.

Resource allocation should not be timed.

If you adjust the execution speed, it should depend on the user’s computer and how many processes there are.

So it should never be solved by timing.

Process Interaction

| Degree of Awareness | Relationship | Potential Problems |

|---|---|---|

| Unaware of each other | Competition | - Mutual exclusion - Deadlock - Starvation |

| Indirect aware (shared object) |

Competition by sharing | - Mutual exclusion - Deadlock - Starvation - Data coherence |

| Directly aware | Cooperation by communication | - Deadlock - Starvation |

Requirements for Mutual Exclusion

- Must be enforced.

- A process that halts must do so without interfering with other processes

- No deadlock

- No starvation

- A process must not be denied access to a critical section when there is no toher process using it.

- No assumptions are made about relative process speeds or number of prcesses.

- A process remains inside its critical section for a finite time only.

Mutual Exclusion Implementing

Hardware Support

Atomic operations shall be implemented in critical sections.

blocking interrupt

Turn off interrupt before entering critical section and turn on interrupt when critical section is finished.

However, processes that are not related to shared resources are all put on waiting.

In addition, even if it is a process that uses shared resources, threads before using resources are also in a waiting state.

It is very inefficient.

When the interrupt is turned off, it does not work on the multiprocessor.

Compare & Swap Instruction

also called a compare and exchange instruction .

first, make a flag and make two simple code.

Assume that it enters the foo function and is called at the end of the bar function.

/* Program 1 */

1 int flag;

2

3 foo() { // enter

4 while (flag == 0);

5 flag = 0;

6 }

7

8 bar() { // exit

9 flag = 1;

10 }

/* Program 2 */

1 int flag;

2

3 foo() { // enter

4 while (flag == 0);

5 flag = 0;

6 }

7

8 bar() { // exit

9 flag = 1;

10 }

It looks like it’s been implemented,

If there is an interruption at line 4, and context switching occurs,

Both programs will enter the critical section.

You can also blocking interrupt line 4 up and down,

but it has become a little more efficient from the initial description,

and the problem remains the same.

So there is a command that supports reading and writing flag values at once.

That’s what a compare and swap is .

1 /* program mutualexclusion */

2 const int n = /* number of processes */;

3 int flag; /* 0: allow 1: disallow */

4

5 void foo(int i) {

6 while (true) {

7 while (compare_and_swap(&flag, 0, 1) == 1); /* read & write at once */

8 /* critical section */

9 flag = 0;

10 /* remainder */

11 }

12 }

void main() {

flag = 0;

parbegin (P(1), P(2), ... , P(n));

}

another way is exchange .

1 /* program mutualexclusion */

2 const int n = /* number of processes */;

3 int flag;

4

5 void bar(int i) {

6 while (true) {

7 int keyi = 1;

8 do exchange (&keyi, &flag)

9 while (keyi != 0);

10 /* critical section */

11 flag = 0;

12 /* remainder */

13 }

14 }

15

16 void main() {

17 flag = 0;

18 parbegin (P(1), P(2), ... , P(n));

19 }

advantages & disadvantages

advantages

- Applicable to any number of processes on either a uni or multi.

- Simple and easy to verfy.

- It can be used to support mulitple critical sections by its own variable.

disadvantages

- Busy waiting

- Starvation

- Deadlock

Ways of Mutual Exclusion

| Name | Info |

|---|---|

| Semaphore | general |

| Binary Semaphore | Use Binary Semapore var |

| Mutex | Lock & unlock only in one process. |

| Condition Variable | support by program language |

| Monitor | support by program language |

| Event Flags | |

| Maliboxes/Messages | synchronize by communication |

| Spinlocks | use in multiprocessor |

Semaphore

It is variable. type is integer.

The value of the semaphore is the number of accessible processes.

It has some operations.

- Initialize to a nonnegative integer value

- Use semWait # decrement

- Use semSignal # increment

example of Semaphore

1 struct semaphore {

2 int count;

3 queueType queue; // FIFO

4 };

5

6 void semWait(semaphore s) {

7 s.count--;

8 if (s.count < 0) {

9 /* place this process in s.queue */

10 /* block this process */

11 }

12 }

13

14 void semSignal(semaphore s) {

15 s.count++;

16 if (s.count <= 0) {

17 /* remove a process from s.queue */

18 /* place process on ready list */

19 }

20 }

The fact that Semaphore’s value has become negative means that it is impossible to enter.

The negative value of the semaphore is the number of blocked because they did not enter.

When a semaphore signal is called and one process exits the critical section,

The first (or higher priority) process that is blocked is caught in the ready queue.

example of Binary Semaphore

1 struct binary_semaphore {

2 int value = 0;

3 queueType queue;

4 };

5

6 void semWait(binary_semaphore s) {

7 if (s.value == 1)

8 s.value = 0;

9 else {

10 /* place this process in s.queue */

11 /* block this process */

12 }

13 }

14

15 void semSignal(binary_semaphore s) {

16 if (s.queue is empty())

17 s.value = 1;

18 else {

19 /* remove a process from s.queue */

20 /* place process on ready list */

21 }

22 }

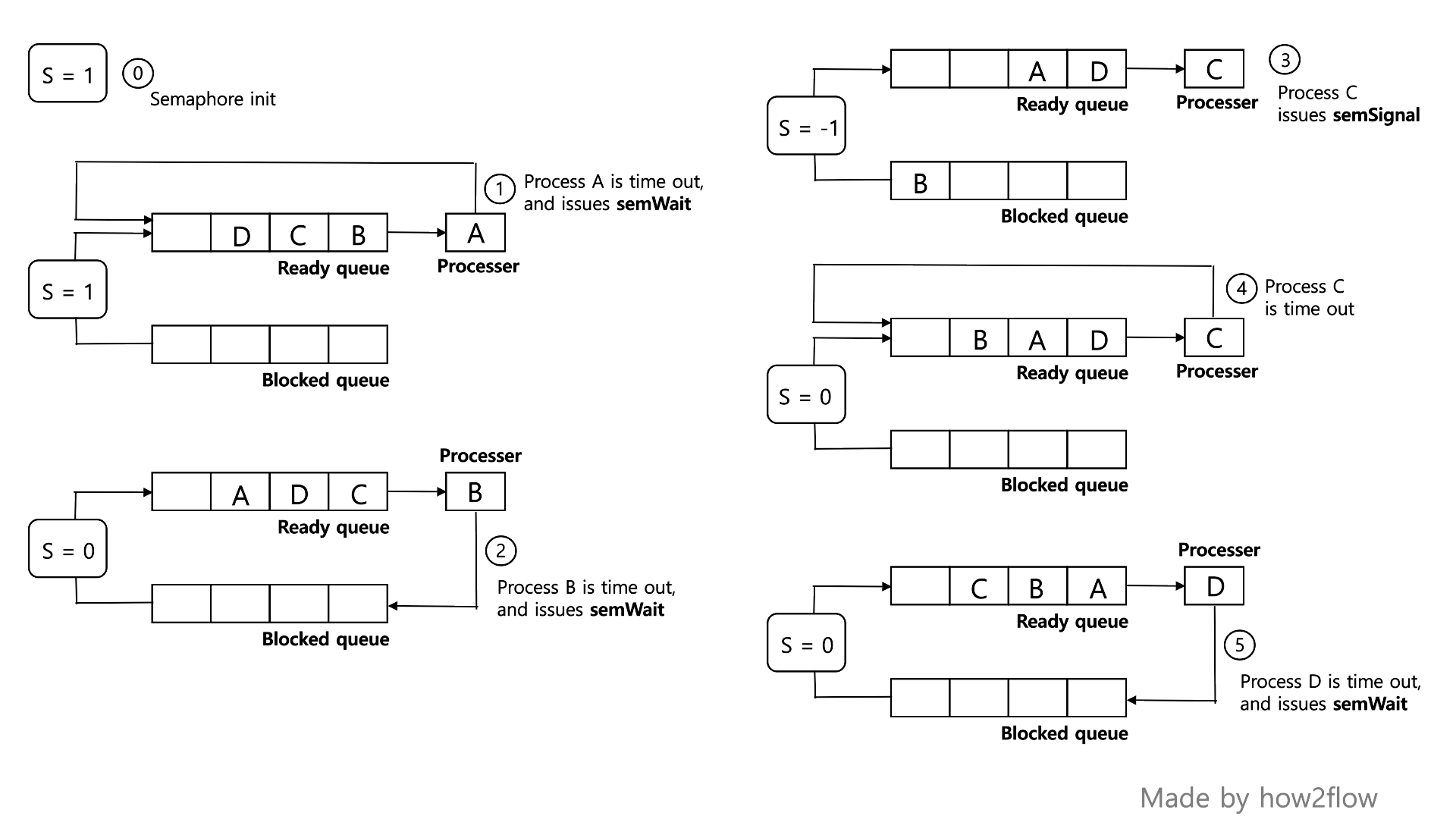

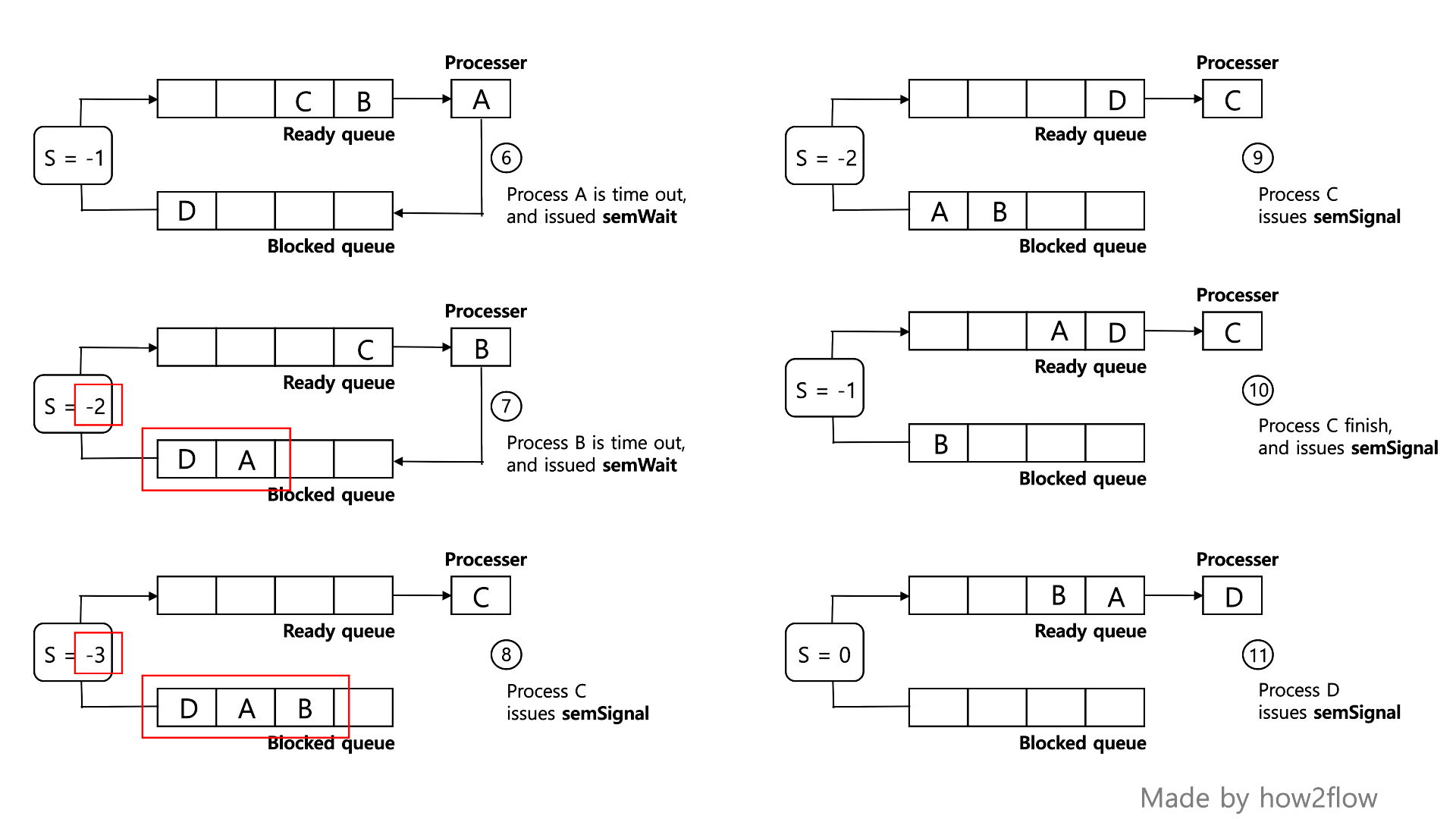

examples of Semaphore Machanism

It is an image representing a semaphore operation.

It can be seen that the state of the process changes

while ensuring mutual exclusion as shared resources are used and access to critical sections.

picture [5-1] Semaphore Queue 1

picture [5-2] Semaphore Queue 2

The semaphore variable value is equal to the number of processes waiting in the blocked queue.

It may not be a unicore system, or it may vary depending on the situation.

semaphore vs blocking interrupt

I assume that there is a process that performs +1 and -1 on the shared resource,

and the result is a program that wants the same value as the first one.

Let’s compare inter-exclusion with blocking interrupt and semaphore.

picture [6-1] No ensure mutual exclsion

picture [6-2] Blocking Interrupt

picture [6-3] Using Semaphore

Blocking interrupts requires waiting for processes that are not related to shared resources when a process approaches a critical section.

It’s inefficient. Above all, it cannot be used in multi-core systems.

Semapore does not block interruptions,

so it is free to switch processes and can be applied to multi-core systems.

ETC

A system example that guarantees automatic-exclusive and synchronization like semaphores.

Monitors

Programming language constrcut that provides equivalent functionality

to that of semaphores and is easier to control.

Program languages

Pascal, Pascal-Plus, Modula-2, Modula-3, and Java

Characteristics

Local data vars are accessible only by the Monitor’s procedures and not by any external procedure.

Process enters monitor by invoking one of its procedures(functions).

Only one process may be executing in the monitor at a time.

synchronization

Achieved by the use of Condition variables that are combined within the monitor and accessible only within the monitor.

Condition variables are operated on by two functions:

cwait(c): suspend execution of the calling process on condition c.

csignal(c): resume execution of some process blocked after a cwait on the same condition.

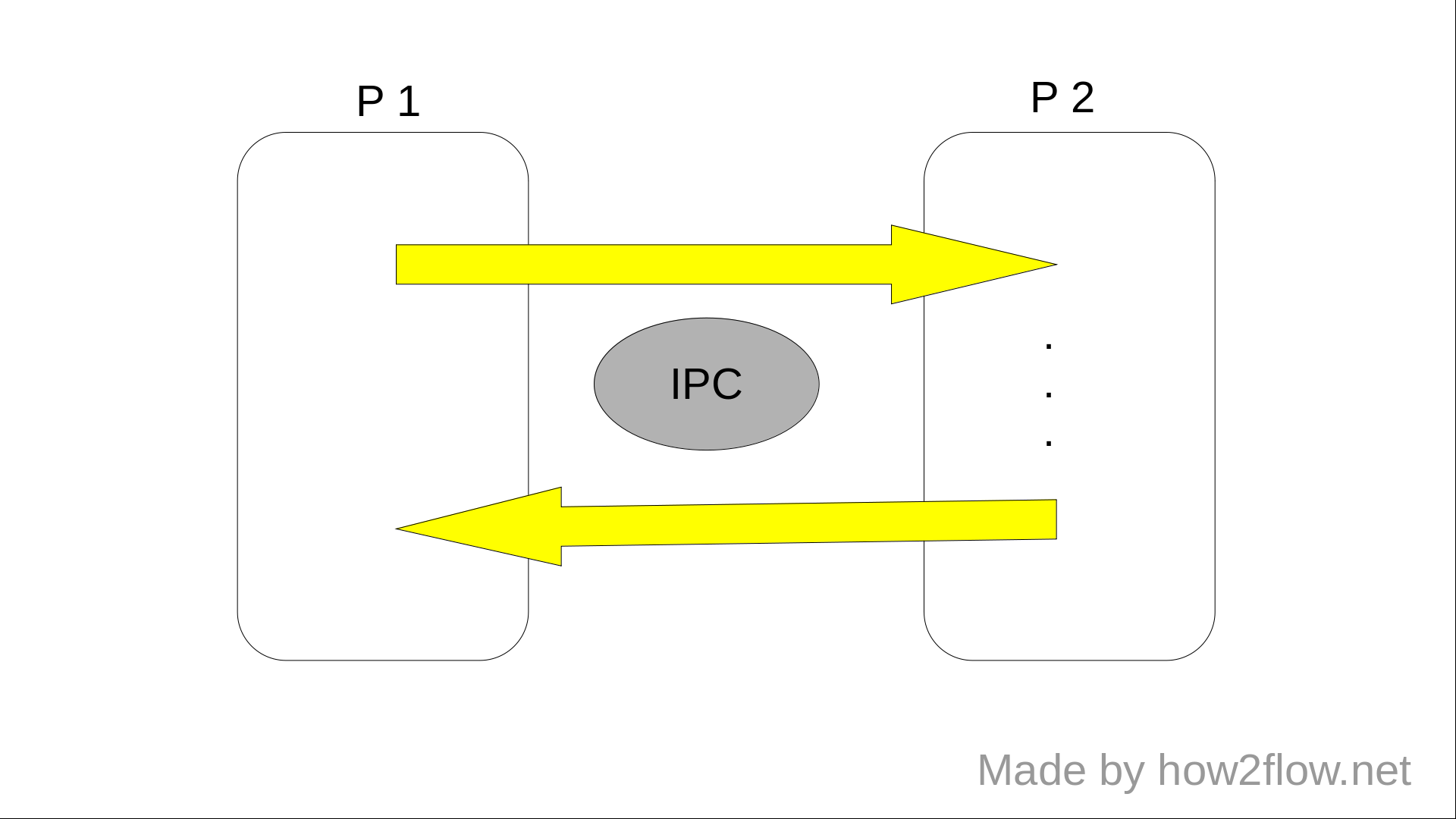

Message Passing

Direct communication between processes with IPC .

picture [7] Message Passing

works with Distributed systems and shared memory multiprocessor and Uniprocessor systems .

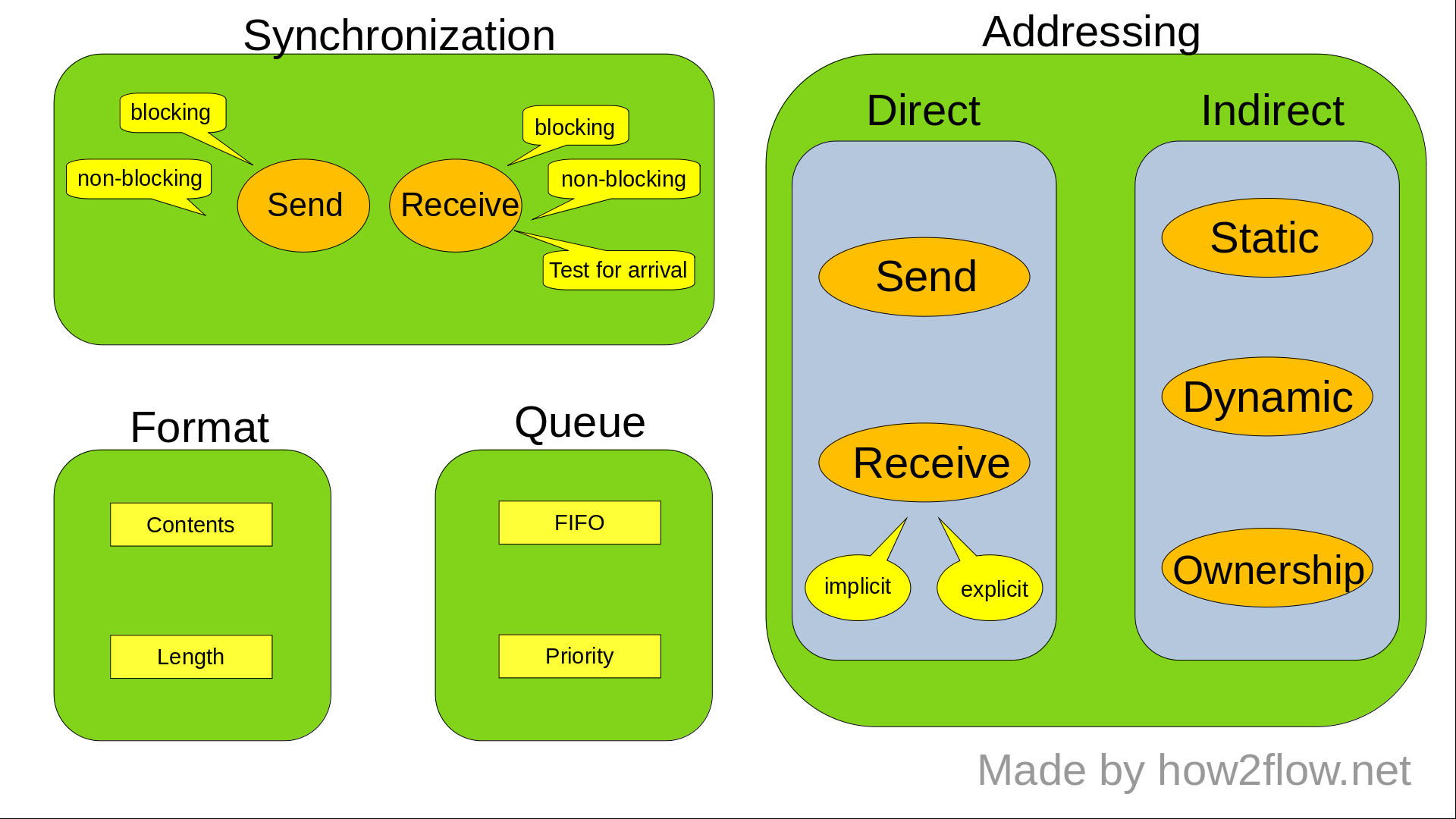

format

The actual function is normally provided in the form of a pair of primitives:

send(destination, message)

receive(source, message)

picture [8] Message Format

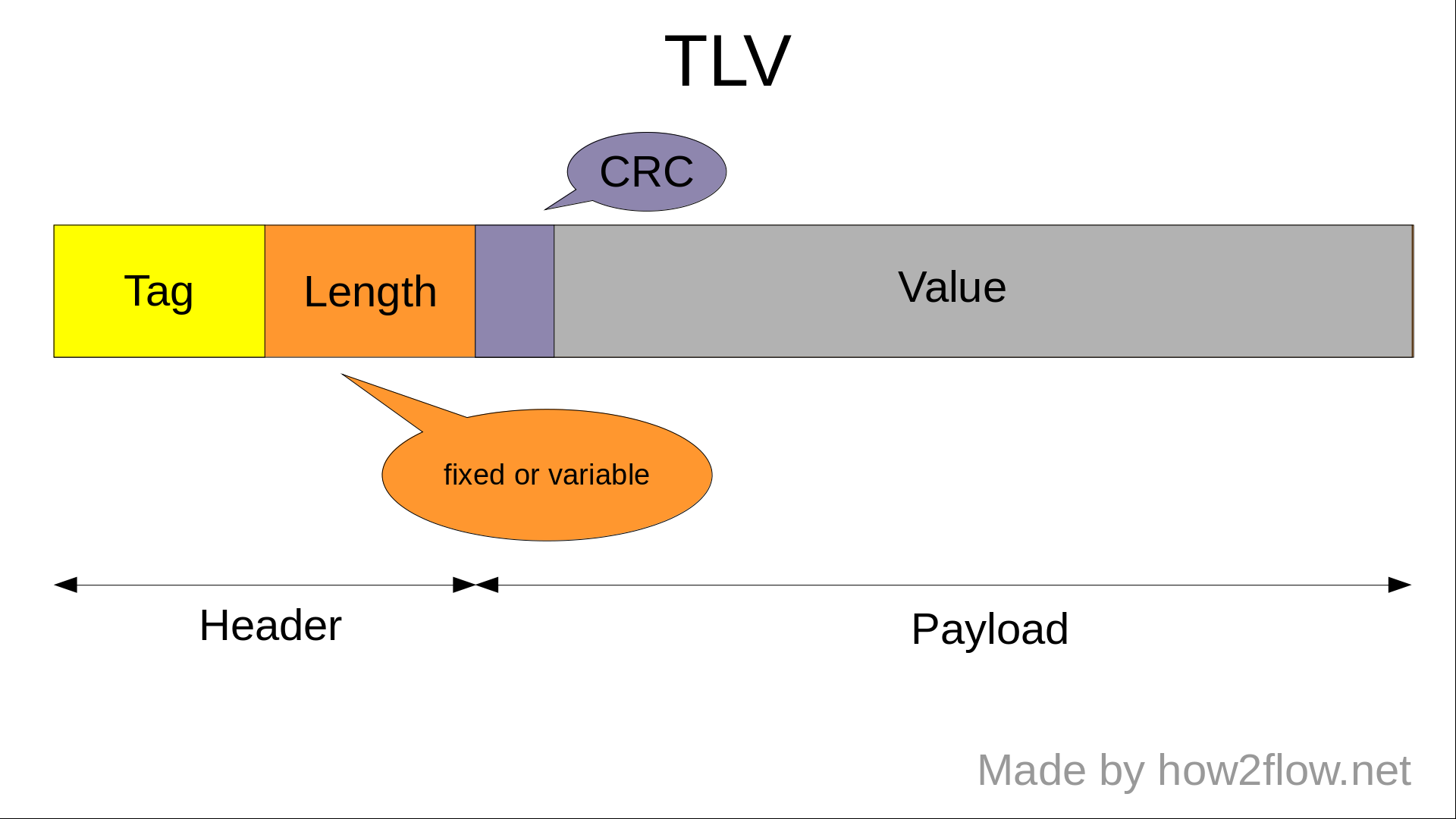

Message Passing are exchanged in TLV format after the source and destination addresses are determined.

picture [9] TLV

There are fixed and variable message lengths.

It is usually used variable types because processes don’t know the length of messages that are actually exchanged.

CRC is optional.

addressing

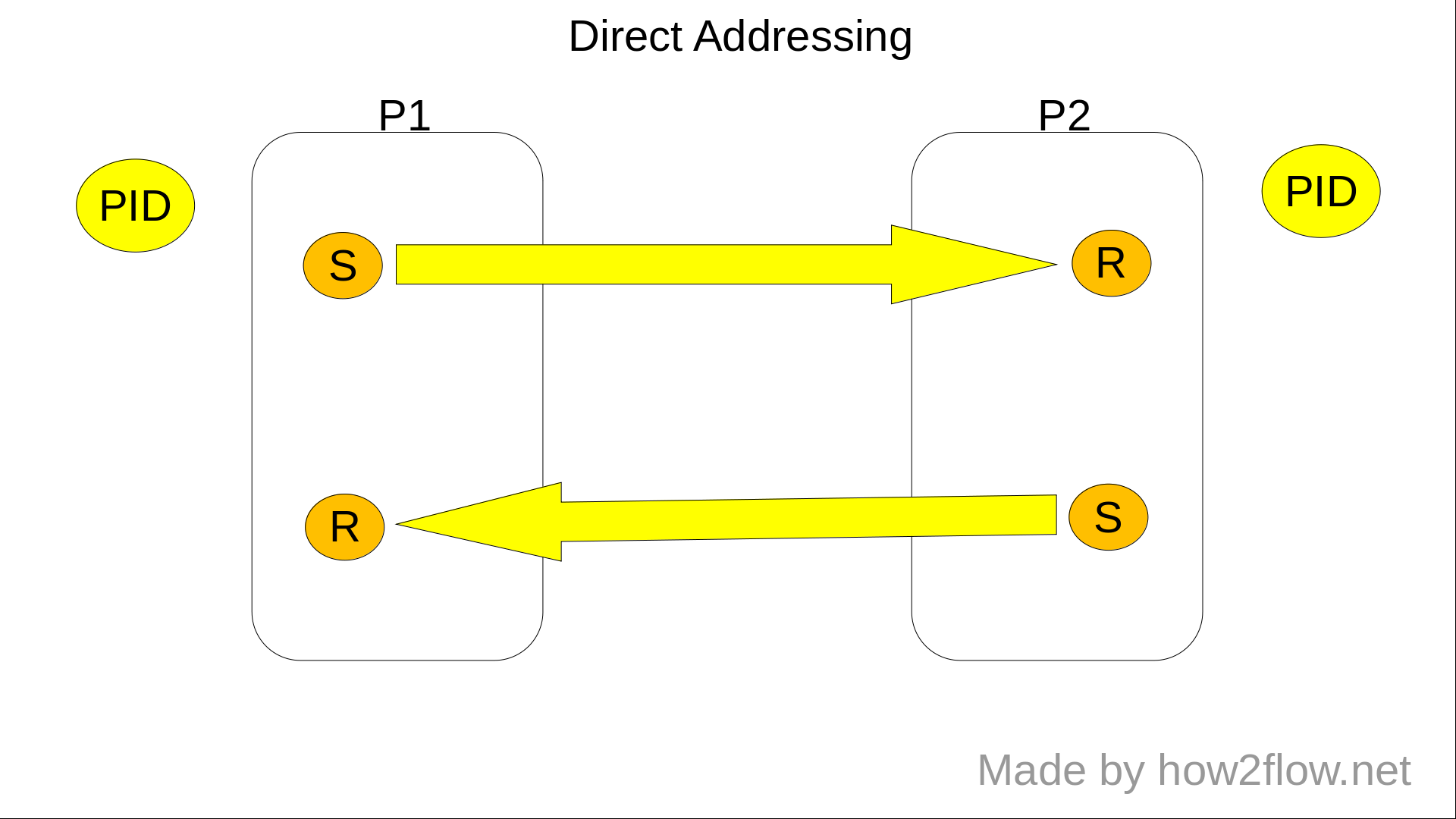

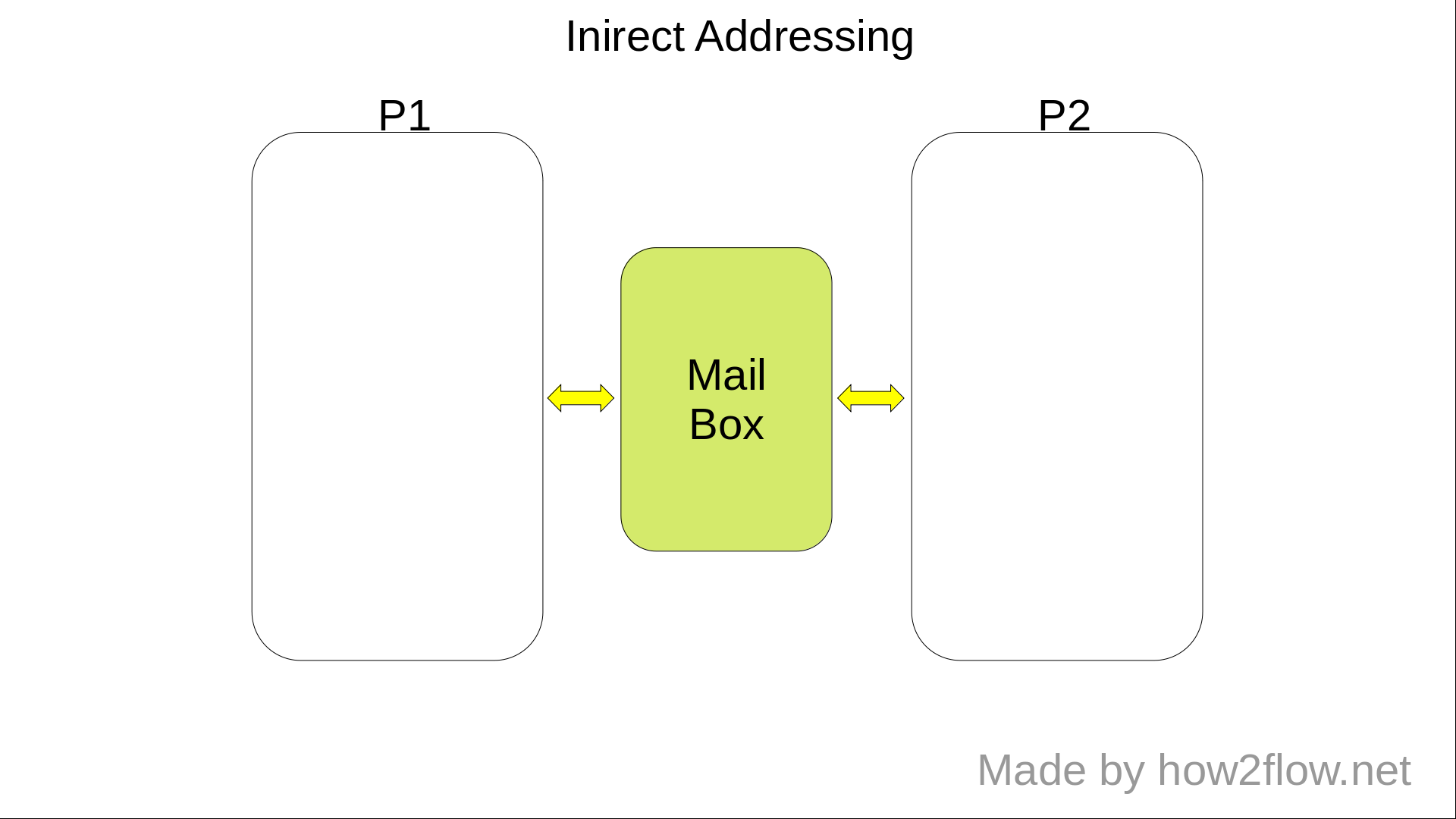

There are two types of addressing: direct and indirect.

picture [10] addressing

The direct format is a method of designating and using a send/receive process with a PID, etc.

The redirect format is used in broadcasts that are sprayed to an unspecified number of people, or in systems that send messages and do not require feedback, etc.

mailbox-code

This is an example of mutualexclusion with mailbox.

1 const int n = /* number of processes */

2 void P(int i) {

3 message msg;

4 while (true) {

5 receive (box, msg);

6 /* critical section */

7 send (box, msg);

8 /* remainder */

9 }

10 void main() {

11 create_mailbox (box);

12 send(box, null);

13 parbegin (P(1), P(2), P(3), ..., P(n));

14 }

}

Leave a comment